Neurostats Digest #6

Blood biomarkers, responders as a causal construct, low vs. high risk use-cases of biomarkers

As every disease area pins its hopes on blood biomarkers, it is instructive to see what these tests are actually being used for in Alzheimer’s.

It strikes me that the current usage of blood biomarkers in dementia will be a crutch that helps patients and clinicians take a patient’s future risk of dementia seriously. Patients can easily initiate conversations about it, tests become a reimbursable expense, and so forth.

From a technological standpoint, we are not doing a more precise job of measuring dementia risk, and we are no closer to capturing a narrower biological process that better differentiates heterogeneous paths to developing dementia. The scope of equivalence between blood-biomarker-based decisions and PET-imaging-based decisions is still narrow. The utility is primarily in cost reduction without reducing accuracy, which is a big deal in terms of accessibility.

In the long run, we don’t yet know the price we will pay in causal specificity for substituting the tissue of interest with blood, or how that intersects with a wide swath of the general population being measured instead of a targeted population.

The recommendations in the new CPG — both of which apply only to patients with cognitive impairment being seen in specialized care for memory disorders — are:

BBM tests with ≥90% sensitivity and ≥75% specificity can be used as a triaging test, in which a negative result rules out Alzheimer’s pathology with high probability. A positive result should also be confirmed with another method, such as a cerebrospinal fluid (CSF) or amyloid positron emission tomography (PET) test.

BBM tests with ≥90% for both sensitivity and specificity can serve as a substitute for PET amyloid imaging or CSF Alzheimer’s biomarker testing.
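
To see why the lower-specificity tier is framed as triage rather than substitution, it helps to turn sensitivity and specificity into predictive values. The sketch below applies Bayes’ rule at the two guideline thresholds. The 50% prevalence of Alzheimer’s pathology is a purely hypothetical value chosen for illustration, not a figure from the guideline, and the function name is my own.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a binary test via Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)  # P(pathology | positive test)
    npv = true_neg / (true_neg + false_neg)  # P(no pathology | negative test)
    return ppv, npv


# ASSUMPTION: hypothetical prevalence of pathology among tested patients.
PREVALENCE = 0.5

# Triage tier: >=90% sensitivity, >=75% specificity (at the thresholds).
triage_ppv, triage_npv = predictive_values(0.90, 0.75, PREVALENCE)

# Substitute tier: >=90% for both sensitivity and specificity.
substitute_ppv, substitute_npv = predictive_values(0.90, 0.90, PREVALENCE)

print(f"triage:     PPV={triage_ppv:.2f}  NPV={triage_npv:.2f}")
print(f"substitute: PPV={substitute_ppv:.2f}  NPV={substitute_npv:.2f}")
```

Under this assumed prevalence, a positive result from a test sitting exactly at the triage thresholds is noticeably less trustworthy than a negative one, which is consistent with asking for confirmation of positives. Both predictive values shift with prevalence, so the same test behaves differently outside specialized memory care.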

“Not all BBM tests have been validated to the same standard or tested broadly across patient populations and clinical settings, yet patients and clinicians may assume these tests are interchangeable,” said alz.org/press/spokespeo…, Alzheimer’s Association vice president of scientific engagement and a co-author of the guideline. “This guideline helps clinicians apply these tools responsibly, avoid overuse or inappropriate use, and ensure that patients have access to the latest scientific advancements.”

Box’s “Improving almost anything” applies to dieting too.

What would reduce the friction to actually implementing optimal experimental designs dynamically for personal health experiments?

In reference to: “Optimal experimental design is difficult. I’ve usually tried only one new food at a time. In retrospect, this was not optimal, especially near the beginning of the process. If you eat five foods you are unsure of, wait a week, and get no symptoms, then all five are fine. If you get sick, at least one of them was a trigger, but you don’t know which one, and it may have been more than one. Then you could try two of them at a time, and so on. Proceeding this way would get you more information faster, at an upfront cost of being more sick more often. There’s whole subfields of statistical theory devoted to optimizing this sort of process. For a certain sort of geek, using that would be a lot of fun. I am that sort of geek! But it didn’t occur to me to try this until a year into the process. And there are several reasons it would be difficult. So I still haven’t done i”
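
The procedure described in the quote resembles adaptive group testing. Here is a minimal sketch of one simple variant (recursive halving), not an optimal design. It assumes a very friendly world: reactions are deterministic, there is no carry-over between trials, and a group triggers symptoms exactly when it contains at least one trigger food. The food names and the function `group_test` are hypothetical.

```python
def group_test(foods, triggers):
    """Classify foods by recursive halving.

    Returns (trials, sick_trials, found_triggers).
    One trial = eat every food in the group, wait, observe symptoms.
    ASSUMPTION: a group causes symptoms iff it contains a trigger.
    """
    def reacts(group):
        return any(food in triggers for food in group)

    def solve(group):
        if not reacts(group):
            return 1, 0, set()        # one symptom-free trial clears the group
        if len(group) == 1:
            return 1, 1, set(group)   # a single food that makes you sick
        mid = len(group) // 2
        lt, ls, lf = solve(group[:mid])
        rt, rs, rf = solve(group[mid:])
        # This trial was a sick one, plus whatever the two halves cost.
        return 1 + lt + rt, 1 + ls + rs, lf | rf

    return solve(list(foods))


# Hypothetical example: 16 uncertain foods, exactly one true trigger.
foods = [f"food_{i}" for i in range(16)]
trials, sick_trials, found = group_test(foods, triggers={"food_3"})

print(f"group testing: {trials} trials, {sick_trials} of them sick, found {found}")
print(f"one at a time: {len(foods)} trials, 1 of them sick")
```

In this toy case halving needs fewer trials than testing one food at a time, but more of those trials end in symptoms, which is the trade-off the quote points at. With many triggers, noisy symptoms, or carry-over, this simple scheme loses its advantage and a more careful design would be needed.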

Today: Research syntheses, quantitative systematic reviews, and position papers usually take all previously reported research articles at face value.

Future: A research synthesis will dynamically re-analyze all prior work to make it both as relevant as possible to the question at hand and as accurate as possible given all that has been discovered since the original publication.

*Individual patient data re-analysis is done for certain kinds of meta-analyses, but it is largely restricted to clinical trials, usually of the same drug-outcome combination. Nobody does this for larger-scale integration of different types of studies and levels of evidence.

If everyone is following the hottest trends written up in 5-year strategic research plans, the work may well be important, but it is also mainstream.

In reference to: “It can be easy to delude yourself into thinking that you are doing something radical and creative as an expression of your own deep interests, when in fact you are doing what everybody around you is doing.”

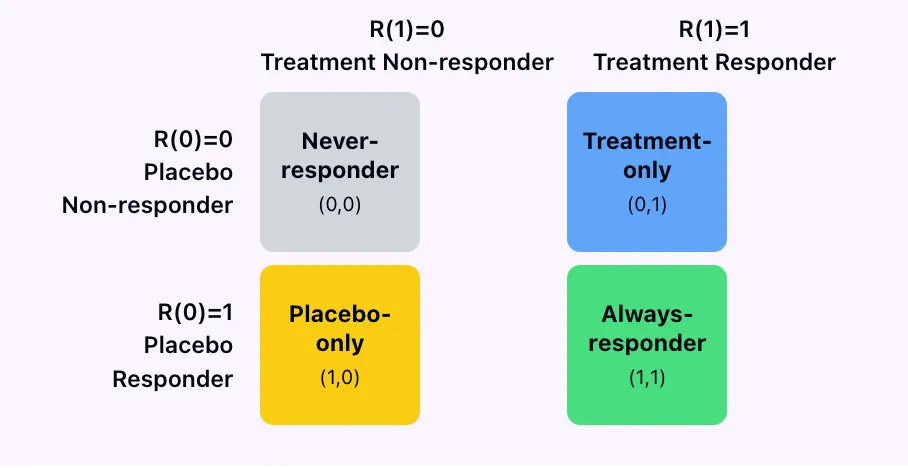

The 4 types of patients we can only distinguish in Lake Wobegon,

where all counterfactuals are fixed (no noise),

no one has carry-over (no history),

and

every patient is helpfully deterministic (stable individual effects).

The 4 types of patients we can only distinguish in Lake Wobegon, II

In practice, you cannot use data from a standard RCT to classify patients into these 4 buckets, because for any one patient we only ever observe the outcome under placebo, R(0), or the outcome under treatment, R(1), but never both.

Among patients who respond on treatment, always responders and treatment responders can’t be distinguished.

Among patients who respond on placebo, always responders and placebo responders can’t be distinguished.
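
A small potential-outcomes sketch makes the point concrete. The two patient mixes below are invented for illustration (counts per 100 patients, with type labels following the four buckets above), and they assume an idealized trial with perfect randomization and no sampling noise. They differ in how many patients of each type they contain, yet they produce identical response rates in each arm.

```python
# Each type is defined by its pair of potential outcomes (R(0), R(1)).
TYPES = {
    "always":    (1, 1),  # responds under placebo and under treatment
    "treatment": (0, 1),  # responds only under treatment
    "placebo":   (1, 0),  # responds only under placebo
    "never":     (0, 0),  # responds under neither
}


def arm_responders(mix):
    """Responders per 100 patients in each arm of an idealized RCT.

    ASSUMPTION: randomization gives both arms the same type mix,
    and there is no sampling noise.
    """
    placebo_arm = sum(n * TYPES[t][0] for t, n in mix.items())
    treated_arm = sum(n * TYPES[t][1] for t, n in mix.items())
    return placebo_arm, treated_arm


# Two hypothetical populations (counts per 100 patients).
mix_a = {"always": 30, "treatment": 30, "placebo": 0, "never": 40}
mix_b = {"always": 10, "treatment": 50, "placebo": 20, "never": 20}

print("mix A (placebo, treated):", arm_responders(mix_a))
print("mix B (placebo, treated):", arm_responders(mix_b))
```

Both mixes yield the same number of responders in the placebo arm and in the treated arm, so arm-level response rates alone cannot tell us how many patients fall into each bucket, let alone which bucket a given patient belongs to.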

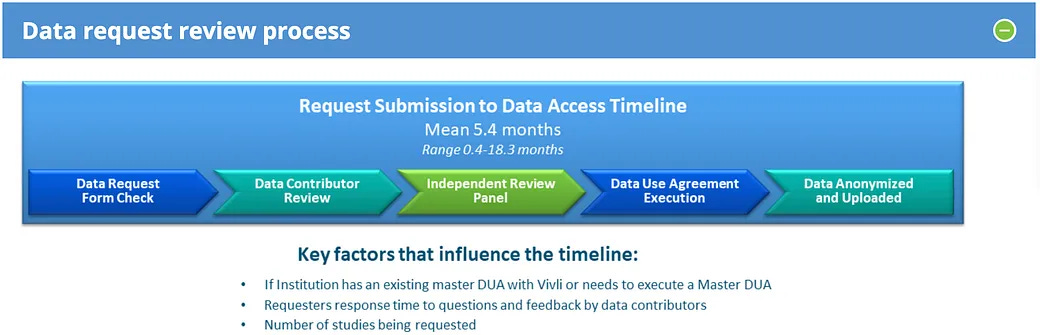

Why is it legally hard to share clinical trial data when companies like EPIC can just strip personal information and re-sell it to so many other people?

5 months to get access to clinical trial data on VIVLI (likely more if it is multiple trials)

whereas NIH repos that I will not name just have the de-identified data downloadable on their website with a simple data use agreement!

Gobburu (2009) points out that biomarkers can act as surrogates for drug development decisions without having to be surrogates for regulatory approval. The scientific concerns of surrogacy remain the same but the degree of accuracy / risk-reward will be different.

Changes in fasting plasma glucose or international normalized ratio (INR) are good surrogates for dose selection but not for regulatory approval.

Caveat: A lot has changed since 2009, as we now expect many more tools for evaluating drugs to go through a qualification process.

The vast majority of neuroimaging studies that estimate heritability of various biomarkers use kinship/pedigree or twin study estimates.

In reference to: “Kinship-based models therefore provide us with a mushy estimate of narrow-sense heritability plus an unbounded amount of environmental confounding”